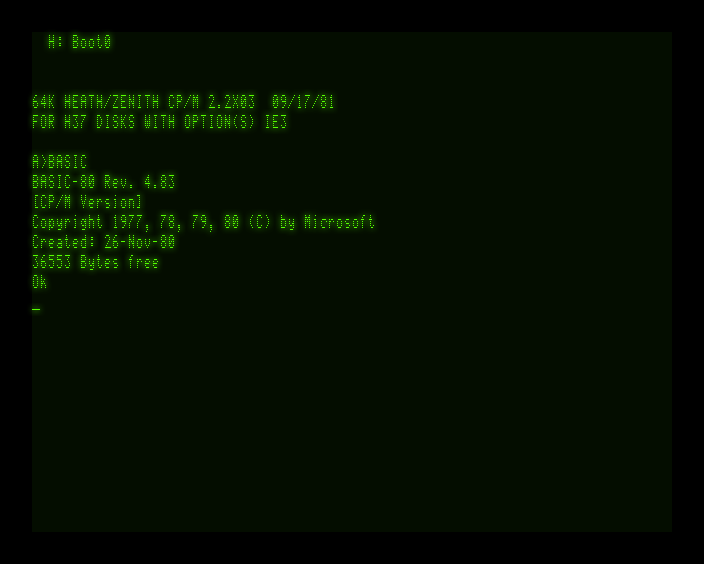

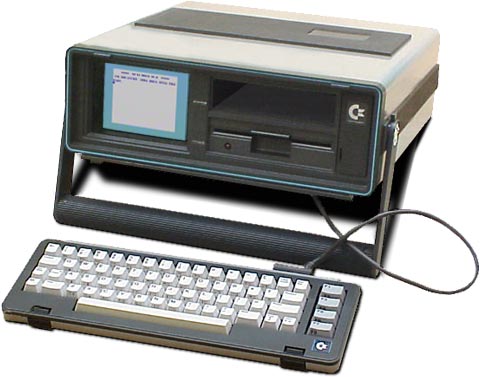

BASIC?! Says who? I had to relearn programming! Worse, after I'd used BASIC on a North Star Horizon for about a year, military obligations put my programming on hold. (But I knew that I had found my niche.) In 1983, I bought a Commodore C64 with built-in '8K BASIC'. I soon expanded this with Simons' BASIC (which took up even more of my precious RAM), then with Business Basic, which came on a cartridge and freed up some RAM. Neither could do what I wanted, which, at the time, was a simple music database with a pretty advanced (for the time) user interface and double- and triple-duty fields. Little did I understand back then that the rudimentary development tools were as much a hindrance as the languages themselves. But I did later learn that those recycled fields I thought I'd so brilliantly invented were a standard tool in the bag of tricks of major software firms like IBM.

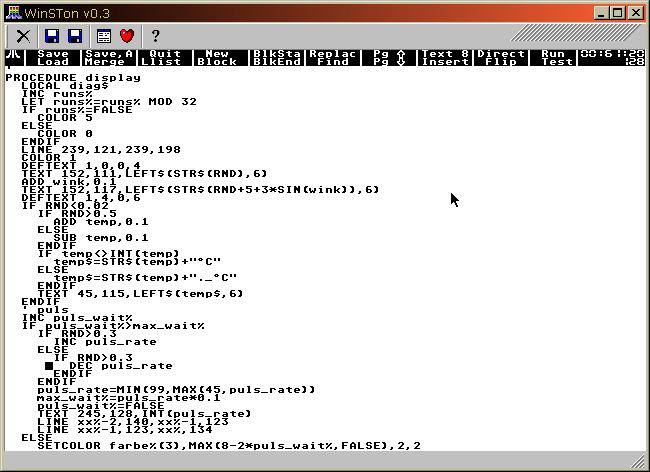

I upgraded to an Atari 520ST and GFA BASIC in early 1986. This machine had much more room, and GFA Basic had more commands, more speed, more power overall. What I didn't have, though, was access to the GEM GUI's APIs. Still, I could finally write the code I wanted. Sort of. The database part was proving a bit challenging, and the development tools, while much better than the C64's, were still pretty weak, but in ways that I didn't yet fully understand.

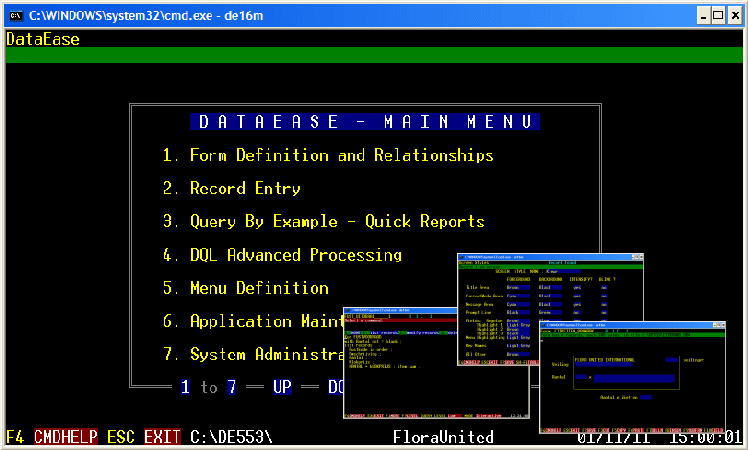

I found myself working an internship at Daimler, programming in Fortran and DataEase. I really liked DataEase. I really hated Fortran. With DataEase, I wasn't really programming, but, OH!, the things I could do! It was one of the first RAD (rapid application development) tools, and it was my first clue as to what was missing. But my access to it ended right along with my internship.

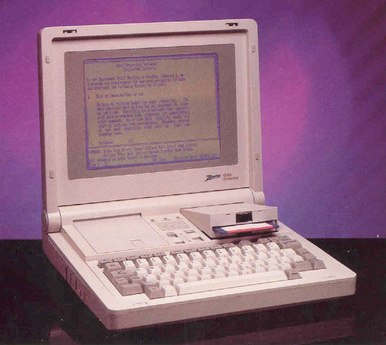

There was a software firm in our building, and I got to spend some time with the owner, who told me that they were moving to C, so I borrowed some books from them, gave up on the 'hobbyist' computers, bought a PC of my very own (actually a laptop; I've been using laptops since their introduction), and started learning C. (FINALLY!) (I'll bet you thought this article was going to turn out to be all about Basic.) That was even more challenging than learning Basic after machine code. I'm not known for being slow, but C seemed to me like some weird form of cross-platform assembler, and I'd already rejected assembler along with machine code, and I just wasn't getting it. I'm no masochist. Nor do I plan on making my pilgrimage on my knees. The authors swear it was not their intent to create a cross-platform assembler, but, honestly, I just couldn't see any other reason for its existence, so I'm skeptical. I thought it was stupid, so I set it aside and turned to the GW-BASIC that came with the machine.

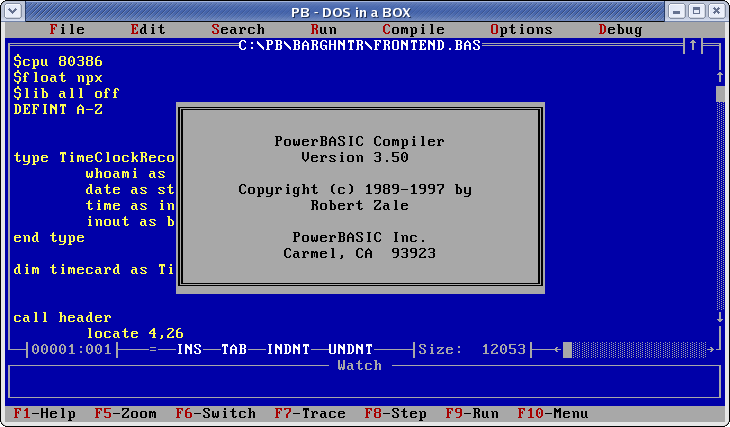

But I didn't like that much more than the Commodore Basic. All I really got was even more RAM than my Atari had, a bit more speed, and a few more commands. It helped me write more readable, maintainable code, but the only really bright spot turned out to be the standards. What I wrote, others could run. That helped a lot! I had helped finance my education by writing software for the mosquito fleet of Sharp and Casio handhelds that were so popular with students (and engineers, as it turned out). I'd come to really appreciate microcassette tape drives, and standards, but development tools, especially on those things, were still in the stone age, so I tried True BASIC. Can't be more standard than that, eh? Guess again! Not knowing what I had, though, I left it for TurboBasic, which soon became PowerBasic.

Now, granted, with Turbo/PowerBasic, I was finally able to write some really cool things. My best had to have been a spreadsheet I wrote for my electronics engineering classes that could switch between polar and vector math, something no spreadsheet that I know of today can do. The dean even wound up appropriating it to create test problems that actually worked (since his own didn't). (Oooohhh.... that guy! I spent an entire holiday weekend trying to solve one of his makeshift textbook problems, only to have him confess, "Oh... Yeah... That one. No, it doesn't work. I forgot to give you the reluctance. You need that to solve it." 'Scuze me?! I'm paying how much for this class? I want a refund!)
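For anyone who never had to do it by hand: AC circuit work constantly flips between rectangular (a + jb) and polar (magnitude at an angle) forms, because addition is easy in one and multiplication or division in the other. Here is a minimal Python sketch of that switching using the standard `cmath` module. It assumes 'vector' above means rectangular form; the helper names and the 3 + j4 ohm example are illustrative, not taken from the original spreadsheet.

```python
import cmath
import math

def to_polar(z):
    """Rectangular a + jb -> (magnitude, angle in degrees)."""
    magnitude, angle = cmath.polar(z)
    return magnitude, math.degrees(angle)

def to_rect(magnitude, degrees):
    """(magnitude, angle in degrees) -> rectangular a + jb."""
    return cmath.rect(magnitude, math.radians(degrees))

# Illustrative example: 3 ohms of resistance in series with 4 ohms of inductive reactance.
impedance = complex(3, 4)
z_mag, z_deg = to_polar(impedance)          # about 5 ohms at 53.13 degrees

# Division is easiest in polar form: divide magnitudes, subtract angles.
v_mag, v_deg = 10.0, 0.0                    # a 10 V source at 0 degrees
i_mag, i_deg = v_mag / z_mag, v_deg - z_deg # about 2 A at -53.13 degrees

# Back to rectangular; this should agree with plain complex division V / Z.
current = to_rect(i_mag, i_deg)
```

The point of keeping both forms is exactly the switching: sums of impedances stay rectangular, while Ohm's-law divisions drop to a magnitude division and an angle subtraction.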

But, as my projects grew in complexity, I began to see what pundits and experts were all telling me were the shortcomings of Basic. Chief among these was that I really needed to combine string and integer arrays. But how? (Stupid me!) (The answer was so simple!)

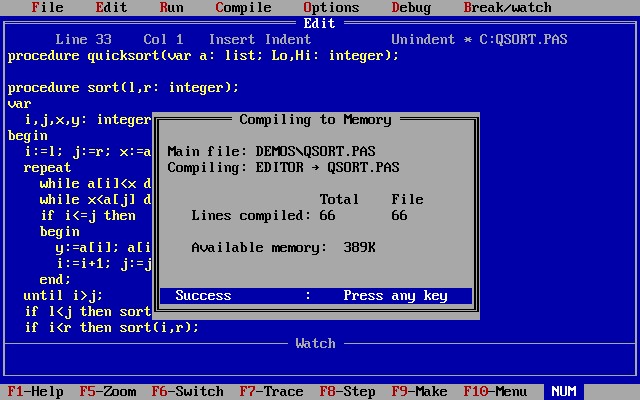

Then, I tried Pascal. With Pascal, I was writing really cool programs. I wrote a student tracking application for the software engineering department that almost got me expelled. It started out as the class project in CS101 (I gave up on EEE!). I could see where they were going, so I jumped the queue and wrote the whole thing ... and then some. Its crowning feature was a cursor-key-rotated 3D graph that allowed bars, pyramids, dots, and ribbons in a box whose 3 user-facing sides vanished as they came around the corner, and whose 3 other sides were opaque, graduated, and bore a shadow of whatever graph appeared out in the open space of the box. THAT ... was a lot of math! I couldn't believe it when I found myself accused of trying to pass off a Lotus 1-2-3 macro as my own project. I'd never even used Lotus 1-2-3! But all I had to do to prove to the dean that it was really all my own code (written in a single weekend, no less) was compile it on his system in his office. When I left school, they were still using it themselves to track their students.
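The 'vanishing walls' effect comes down to a classic test: rotate each face's outward normal, and a face whose normal points toward the viewer is a near side (left open), while one pointing away is a far wall (drawn, graduated, shadowed). Below is a minimal Python sketch of that test, not the original Pascal. It assumes the viewer sits on the +z axis and that the cursor keys adjust two hypothetical angles, `yaw` (left/right) and `pitch` (up/down); the face names are also illustrative.

```python
import math

# Outward normals of the six faces of an axis-aligned box.
FACES = {
    "front": (0, 0, 1), "back": (0, 0, -1),
    "left": (-1, 0, 0), "right": (1, 0, 0),
    "top": (0, 1, 0), "bottom": (0, -1, 0),
}

def rotate(v, yaw, pitch):
    """Rotate vector v about the y axis by yaw, then about the x axis by pitch (radians)."""
    x, y, z = v
    # Yaw: rotation about the vertical (y) axis.
    x, z = x * math.cos(yaw) + z * math.sin(yaw), -x * math.sin(yaw) + z * math.cos(yaw)
    # Pitch: rotation about the horizontal (x) axis.
    y, z = y * math.cos(pitch) - z * math.sin(pitch), y * math.sin(pitch) + z * math.cos(pitch)
    return x, y, z

def classify_faces(yaw, pitch, viewer=(0, 0, 1)):
    """Split faces into those turned toward the viewer and those turned away."""
    facing, away = [], []
    for name, normal in FACES.items():
        r = rotate(normal, yaw, pitch)
        dot = sum(a * b for a, b in zip(r, viewer))
        # A positive dot product means the face points toward the viewer.
        (facing if dot > 1e-9 else away).append(name)
    return facing, away
```

In a cursor-key loop, each keypress would nudge `yaw` or `pitch` by a fixed step and redraw: the faces in `facing` are the sides to leave open, and the faces in `away` are the walls that carry the gridlines and the graph's shadow.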

Then I started working at WordPerfect, and was right back in assembler. But ... Wow! ... what they were doing with assembler ... I was impressed. When the Windows product (a story in itself) finally reached the international division (where I worked), I was back in C again. And not just C, but C++, which I'd never used, so I took a few classes in OO, using Smalltalk and C++, at the local state college. And off to the races we went! I wrote a TSR (remember those?) for the text-based programs so we could tell the core developers exactly which character, from which code page, was appearing at what position on which screen. (It was a big problem back then.) I was even getting called up to the core developers' building (Building C) (Get it?) to help one of the programmers with his C. So I was pretty good at it, and the development tools had finally matured into something usable, but I still didn't like C any more than before. I found it ... well ... silly. Unnecessarily complex. Almost as if someone had made it complex for no other reason than to be difficult. I mean, why the semicolons? Basic didn't need them. They were just superfluous. Yeah, yeah, yeah. I know. Believe me. I've taught classes in that very thing. But, like I said, Basic manages without them. So ...?
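Why the code page mattered: a DOS text screen holds bytes, and which glyph a given byte produces depends on the active code page. A small Python illustration using the standard library's `cp437` (original IBM PC) and `cp850` (DOS multilingual Latin-1) codecs follows. The helper names are hypothetical, and this is not how the TSR worked; it only shows the ambiguity such a tool had to resolve.

```python
CODE_PAGES = ("cp437", "cp850")

def describe(byte_value):
    """Show what a single screen byte decodes to under each code page."""
    raw = bytes([byte_value])
    return {cp: raw.decode(cp) for cp in CODE_PAGES}

def differing_bytes(cp_a="cp437", cp_b="cp850"):
    """List high-half byte values (0x80-0xFF) that decode differently under two code pages."""
    return [b for b in range(0x80, 0x100)
            if bytes([b]).decode(cp_a) != bytes([b]).decode(cp_b)]

print(describe(0x82))   # the same accented e under both code pages
print(describe(0x9B))   # a cent sign under cp437, an o-slash under cp850
print(len(differing_bytes()), "of 128 high bytes differ")
```

A bug report that said only "byte 0x9B on screen 3" was useless; the character and the code page had to travel together.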

That's when a friend introduced me to the April Fools' piece reposted by the Vogon News Service, which read:

> CREATORS ADMIT UNIX, C HOAX
>
> Jim Horning, Tue, 4 Jun 91 11:41:50 PDT
>
> The VOGON News Service. Edition: 2336, Tuesday 4-Jun-1991. Circulation: 8466
>
> VNS TECHNOLOGY WATCH: [Mike Taylor, VNS Correspondent] [Littleton, MA, USA]
>
> COMPUTERWORLD 1 April
>
> CREATORS ADMIT UNIX, C HOAX
>
> In an announcement that has stunned the computer industry, Ken Thompson, Dennis Ritchie and Brian Kernighan admitted that the Unix operating system and C programming language created by them is an elaborate April Fools prank kept alive for over 20 years. Speaking at the recent UnixWorld Software Development Forum, Thompson revealed the following:
>
> "In 1969, AT&T had just terminated their work with the GE/Honeywell/AT&T Multics project. Brian and I had just started working with an early release of Pascal from Professor Nicklaus Wirth's ETH labs in Switzerland and we were impressed with its elegant simplicity and power. Dennis had just finished reading 'Bored of the Rings', a hilarious National Lampoon parody of the great Tolkien 'Lord of the Rings' trilogy. As a lark, we decided to do parodies of the Multics environment and Pascal. Dennis and I were responsible for the operating environment. We looked at Multics and designed the new system to be as complex and cryptic as possible to maximize casual users' frustration levels, calling it Unix as a parody of Multics, as well as other more risque allusions. Then Dennis and Brian worked on a truly warped version of Pascal, called 'A'. When we found others were actually trying to create real programs with A, we quickly added additional cryptic features and evolved into B, BCPL and finally C. We stopped when we got a clean compile on the following syntax:
>
> `for(;P("\n"),R--;P("|"))for(e=C;e--;P("_"+(*u++/8)%2))P("| "+(*u/4)%2);`
>
> "To think that modern programmers would try to use a language that allowed such a statement was beyond our comprehension! We actually thought of selling this to the Soviets to set their computer science progress back 20 or more years. Imagine our surprise when AT&T and other US corporations actually began trying to use Unix and C! It has taken them 20 years to develop enough expertise to generate even marginally useful applications using this 1960's technological parody, but we are impressed with the tenacity (if not common sense) of the general Unix and C programmer. In any event, Brian, Dennis and I have been working exclusively in Pascal on the Apple Macintosh for the past few years and feel really guilty about the chaos, confusion and truly bad programming that have resulted from our silly prank so long ago."
>
> Major Unix and C vendors and customers, including AT&T, Microsoft, Hewlett-Packard, GTE, NCR, and DEC have refused comment at this time. Borland International, a leading vendor of Pascal and C tools, including the popular Turbo Pascal, Turbo C and Turbo C++, stated they had suspected this for a number of years and would continue to enhance their Pascal products and halt further efforts to develop C.
>
> An IBM spokesman broke into uncontrolled laughter and had to postpone a hastily convened news conference concerning the fate of the RS-6000, merely stating 'VM will be available Real Soon Now'. In a cryptic statement, Professor Wirth of the ETH institute and father of the Pascal, Modula 2 and Oberon structured languages, merely stated that P. T. Barnum was correct.
>
> In a related late-breaking story, usually reliable sources are stating that a similar confession may be forthcoming from William Gates concerning the MS-DOS and Windows operating environments. And IBM spokesman have begun denying that the Virtual Machine (VM) product is an internal prank gone awry.
>
> {COMPUTERWORLD 1 April} {contributed by Bernard L. Hayes}
>
> Please send subscription and backissue requests to CASEE::VNS
>
> Permission to copy material from this VNS is granted (per DIGITAL PP&P) provided that the message header for the issue and credit lines for the VNS correspondent and original source are retained in the copy.
>
> VNS Edition: 2336, Tuesday 4-Jun-1991

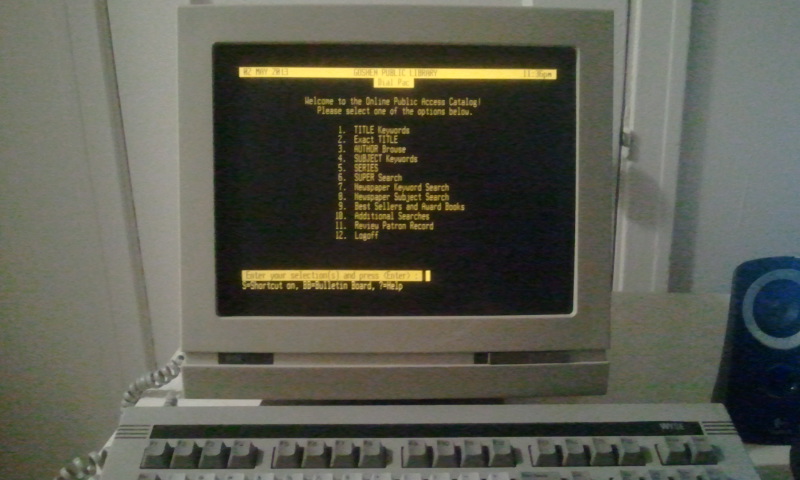

Now, Unix was developed in C. We all know that. So C can't be a joke. Right? RIGHT?? Besides, this was released on April Fools' Day. It has to be the joke. But it really bothered me. It didn't FEEL like a joke. And I wasn't laughing. It felt more like satire. It hit home. It hurt.

And I was already seeing the cracks. Java was already rising, and I knew full well why: C was too bizarre, too arcane, all the many books notwithstanding. And, speaking of books ... There were literally volumes written about programming style, and they were mostly about C/C++. How many Basic or Pascal style guides have you seen? And then came the realization that we weren't really programming in C, or even C++, so much as in OWL, MFC, and then in the particular style of whatever shop we worked in. I expected this of assembler, but C?! Remember what I said about it really being assembler? Some guy even came up with a .h that turned his C code into jive. 'Dis hee ho smack dat ho dere ...' OMG! And every grad student had had his way with Unix! I mean, have you ever seen the names of some of those programs? Archie, Veronica, Jughead, ...

No wonder Java was gaining a following. Java was straightforward. Well, relatively. Up until all the coffee jokes that just made no sense. JavaBeans, anyone? And 'write once, run anywhere' was beginning to look pretty darned brilliant. C's OO extensions didn't really fit, either. (More on that another time.) OO on C was, and is, a torturous journey. I had seen very experienced programmers struggling to master C, let alone C++. I had seen product development grind to a halt, and never recover, just like that 'joke' said. Nothing happened quickly anymore. Not like before. Major features, if not major releases, used to appear every year; major software firms were even beginning to abandon version numbers and adopt the auto industry's long-standing practice of distinguishing their models by year. But no more. Eunichs ... I mean Unix ...
It was all becoming clear now. C really *WAS* a hoax! No. C *IS* a hoax! C was a red herring released into the software development industry, clearly intended to require more, not fewer, programmers. Maybe. And maybe its real purpose was just to slow the pace of development. C is the proverbial monkey wrench thrown in the machine. This wasn't just a hoax; it was a conspiracy. I'd almost have to start suspecting the Russians, were they not every bit as deluded as the rest of the world. And all the pundits, always eager to tell people what they want to hear, only fanned the flames that have been consuming the industry ever since. I should have known something was wrong when I started trying to introduce myself to OO by reading their perspectives on it. CLUELESS! All of them! Just a bunch of ink-slingers! And some of your favorite software packages of yore? Some of your favorite firms? Where are they now? Gone! Why? C++! That's why! And I should know! I was a front-row witness to it all.

Fortunately for me, a new programmer was hired in WordPerfect's international division not long before I left, and he used Visual Basic for a semi-personal, self-assigned project that he completed, after hours, in a few days. It was something I'd done in PowerBasic console mode a couple of years earlier, but his was in Windows. Cool! I couldn't program in Windows. Yet.

Another programmer in the department had been hired to do this very thing 'professionally' in FoxPro (because the department head had an attitudinal problem with accepting and using mine), but his entire project was obviated right before my very eyes, in mere hours, using VB. I was, again, impressed.

I'd only heard of Visual Basic, and had never seriously considered it. After all, not only was I now a Borland/WordPerfect C/C++ programmer, I knew the dark secrets of M$. I was privy to the bogus SDK and all that stuff. What would I want with anything from Microsoft, let alone M$ Basic?
That would have been a step backward, right? But I bought his copy of VB from him, worked through the tutorial on a Saturday afternoon, and was, once again, writing cool things. I immediately wrote an application to help me with my bicycle designs. It quickly let me choose and graph my gear ratios, pick the proper spoke lengths for the various cross patterns I wanted to try, and calculate my ideal frame geometry, stem, seatpost ... I had it all in a few days, and it was glorious.

What was the difference? I couldn't quite put my finger on it yet, so I quickly ascribed it to the fact that I had more experience with Basic than with C. I was wrong. I started questioning everything I knew.

WordPerfect was beginning to founder by then, and a friend who'd already been laid off called me from Dynix. He didn't like his new position there (too technical for him) (Mac 'programmer', doncha know) and had landed a new, less technically intensive one elsewhere, but he didn't want to leave his brother (who'd hired him) with an awkwardly empty desk, so he wanted to see if I'd like to beat the rush. Good timing. I did. And I soon found myself in an entirely different world: the world of host-terminal computing (again, after an eight-year absence), enterprise-scale system and application design, multi-user, multi-threaded architecture, millions of times more data than code, and Pick/Multivalue programming.

I didn't get to program right away. I had to learn the platform first, then their application, then their clients' systems, their support system, ... There was a lot to learn. But when I finally started programming, I once again found myself writing pretty cool things in little time. VERY little time. I wrote a fix for their serials module, correcting corruption introduced by users misusing the software (which wasn't really that hard to do). It took me maybe ten times as long to analyze the problem as it did to design, develop, test, and deploy the code.
I even showed them how to distribute it to other clients' systems in about 1/1,000th the time they had been taking (not really too hard, considering the magnetic-tape/FedEx shuffle was the only dance move they knew). (HyperTerm?! ProComm?! What's that?)

More importantly, I had discovered the difference I had been looking for. The problem wasn't just with C; it was with all strongly typed languages, even PowerBasic, Visual Basic, and all the rest. I practically grew up in the Niklaus Wirth school of programming language design, which dictated that strong typing is the only way to prevent errors, manage large projects, maintain performance, and reverse hair loss. But Pick Basic was a revelation to me. Yes, big screens are indispensable. Syntax-highlighting editors, n-tier architecture, RAD tools, code formatters, and the rest are all very important (and, sadly, underutilized by too many Pick programmers), but the biggest difference of all lay in Pick Basic's (and, as I recall, True BASIC's) largely typeless variables.

This, along with Pick Basic's other unique features, like external functions and subroutines, free formatting (allowing insanity, yes, but also amazing lucidity), and its integral database, makes Pick Basic the best of all possible programming languages, AND Pick the best of all possible platforms (imho). You see, it turns out that it's really all about the simplicity and flexibility of the language, not its complexity and rigidity. Couple all that with some code-structuring and event-driven programming tricks gleaned from Visual Basic, and I was cranking out the magic. (I've even written a graphical game: Reversi. Simple, but still graphical.) The typeless nature of Pick brought unexpected clarity to my code, accelerated development by an order of magnitude, and vastly improved readability and maintainability. My code just worked before; it's glorious now. I was hooked.
I've occasionally wondered where I'd be today had I stayed with C++ and kept going down the Sybase/SQL/Oracle road I was already so far down, but never enough to do anything serious about it.

I love Pick. I REALLY love Pick. I use it for everything. I even write all my own home-brew system-maintenance and utility tools in Pick Basic now, with only minor use of shell scripts. I know most long-time Pick types will cite its data-storage model, maybe even its dictionaries, as its main feature, or their favorite feature, but, in my eyes, none of those matter half as much as its native programming language: Pick Basic.

And, yes, I know Basic isn't really its 'native' language. That would be PROC. But PROC was wholly inadequate back then, and even more so now. That's why it was eclipsed by Basic. And all those Luddites fiercely clinging to it need to 'let it go'. Or be let go. Let us speak no more of PROC. PROC must die. It belongs in the same grave with C, all C's many derivatives (PHP, Perl, Java, et al.), and all other strongly typed and/or bizarre, C-like languages. PERIOD! LONG LIVE BASIC! LONG LIVE PICK!

P.S. What was the 'simple answer' I'd failed to see? Turn everything into strings! All your arrays become string arrays, and all your related data can go into its own single, easy-to-load, easy-to-save, easy-to-parse array.
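The 'turn everything into strings' trick is essentially how Pick holds a record: one string, with fields separated by an attribute mark (character 254) and multiple values within a field separated by a value mark (character 253). Below is a minimal Python sketch of that idea. The two helpers are hypothetical and only loosely modeled on Pick BASIC's EXTRACT and REPLACE (1-based positions, missing fields padded with empties on write); the sample record is made up.

```python
# Pick-style delimiters: attribute (field) mark and value mark.
AM = chr(254)
VM = chr(253)

def extract(record, attr, value=0):
    """Return field `attr` (1-based), or value `value` within it when value > 0; missing -> ""."""
    fields = record.split(AM)
    if attr > len(fields):
        return ""
    field = fields[attr - 1]
    if value == 0:
        return field
    values = field.split(VM)
    return values[value - 1] if value <= len(values) else ""

def replace(record, attr, new, value=0):
    """Return a copy of `record` with field `attr` (or one value in it) set to `new`, padding with empties."""
    fields = record.split(AM) if record else []
    while len(fields) < attr:
        fields.append("")
    if value == 0:
        fields[attr - 1] = new
    else:
        values = fields[attr - 1].split(VM)
        while len(values) < value:
            values.append("")
        values[value - 1] = new
        fields[attr - 1] = VM.join(values)
    return AM.join(fields)

# A made-up music-database record: title, year, and a multivalued list of
# track lengths in seconds. Text and numbers live side by side as strings.
rec = ""
rec = replace(rec, 1, "Sample Album")
rec = replace(rec, 2, "1983")
rec = replace(rec, 3, "259", value=1)
rec = replace(rec, 3, "183", value=2)

# Numeric fields are converted only when arithmetic actually needs them.
total_seconds = sum(int(v) for v in extract(rec, 3).split(VM))
```

Because the whole record is a single string, saving it means writing one string and loading it means reading one back; no separate string and integer arrays have to be kept in step.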